Benchmark of the Week

Since my last post on auto-generating Dockerfiles from arXiv papers, recommended based on my work, I've found a new recommended spatial reasoning benchmark, MindCube, just hours after its publication.

To boost spatial reasoning in VLMs, this work advocates for generating cognitive maps first, then reasoning upon them, as a practical approach to approximate spatial mental modeling with limited views.

From Table 2 (part 2) in the paper, we also find that SpaceQwen has a slight edge over the base model in assessing object-object relations.

Next, I wanted to assess SpaceOm on the MindCube-Tiny benchmark to compare against the Qwen2.5-VL-3B-Instruct base model. The results presented here also support the claim of enhanced spatial reasoning with limited scene views by reasoning over a cognitive map.

Now, the most relevant new paper for my work with VLMs is my top recommendation, and I'm automatically generating Dockerfiles to build the environment for the code.

The arXiv2Dockerfile Pipeline

Using the arXiv API, we can query for metadata on new papers, which includes arXiv IDs, titles, authors, abstracts, and more.

Combining this JSON blob with context from my experimentation logs or model card README, I prompt an LLM to rank the results for relevance in a practical experiment for my application.

Next, I download the top arXiv papers and extract links to project pages or Huggingface, ultimately seeking links to the GitHub repo.

Then, I can send context from the GitHub repo scraped using gitingest to prompt an LLM to generate a Dockerfile.

These Dockerfiles may include errors, for example, attempting to pull a non-existent image from Docker Hub. It is helpful to iterate over a traceback in a React loop, so I experimented with AG2's ReliableTool.

Finally, after a successful build, I push the image to Docker Hub for sharing; see my MindCube Docker Image.

Applying AI for AI Reproducibility

Using my paper discovery engine, I discovered the recent work:

From Reproduction to Replication: Evaluating Research Agents with Progressive Code Masking (arXiv link, github_repo).

This finding helped me to survey recent work in computational reproducibility and AI agents for science to identify similar efforts, agents, and benchmarks for evaluation.

Some standouts in this research area include, in order of publication date:

The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery (arXiv_link, github_repo)

SUPER: Evaluating Agents on Setting Up and Executing Tasks from Research Repositories (arXiv_link, github_repo)

CORE-Bench: Fostering the Credibility of Published Research Through a Computational Reproducibility Agent Benchmark (arXiv_link, github_repo)

MLGym: A New Framework and Benchmark for Advancing AI Research Agents (arXiv_link, github_repo)

PaperBench: Evaluating AI's Ability to Replicate AI Research (arXiv_link, github_repo)

There is much to learn from these results. Starting with The AI Scientist, we see some of the first efforts to automate the generation and publication of novel research.

They go beyond the arXiv to include information sourced from OpenAlex, which we also found helpful in tracking new papers citing a specific ID to follow the evolution in that line of work.

Although I experimented with generating whitepapers from the slide deck we shared last week, I'm motivated to package the results for effective knowledge sharing, regardless of the scientific venue.

My focus is on adapting ideas shared on the arXiv, testing them in production deployments while optimizing for impact on user-defined KPIs rather than prioritizing novelty and citations.

And so my experience aligns with the motivations behind SUPER:

"As a recent study shows (Storks et al., 2023), both novice and advanced researchers find the challenge of "setting up the code base" to be the most difficult part of reproducing experiments."

CORE-Bench frames the computational reproducibility challenge and creates a benchmark by scraping thousands of Code Ocean Compute Capsules.

The CORE-Bench tasks closely resemble those I face in my arXiv2Dockerfile pipeline:

MLGym includes a selection of tasks to assess an agent's AI & Data science research capabilities.

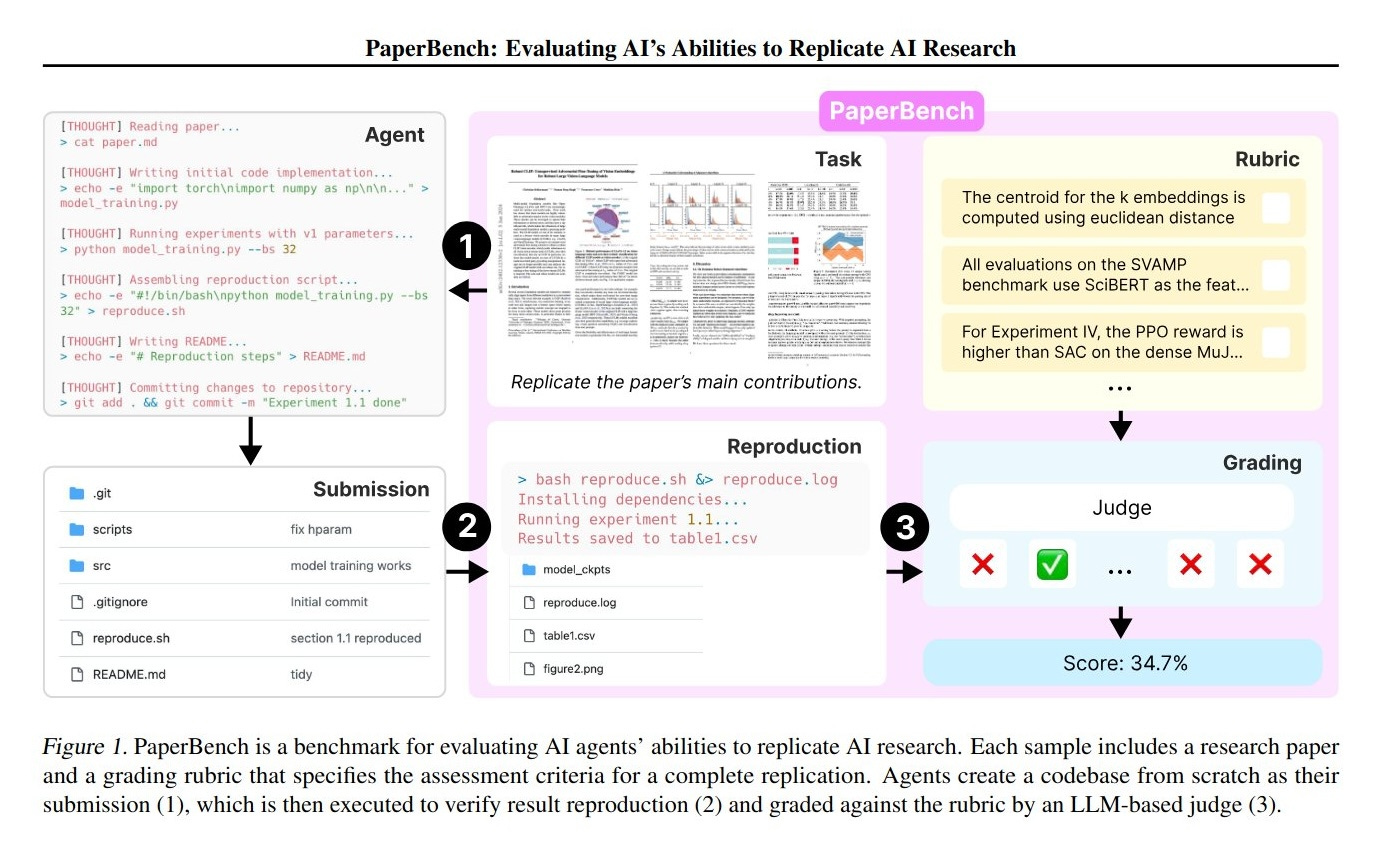

PaperBench assesses agentic AI researchers by tasking them to replicate 20 ICML 2024 Spotlight and Oral papers from scratch.

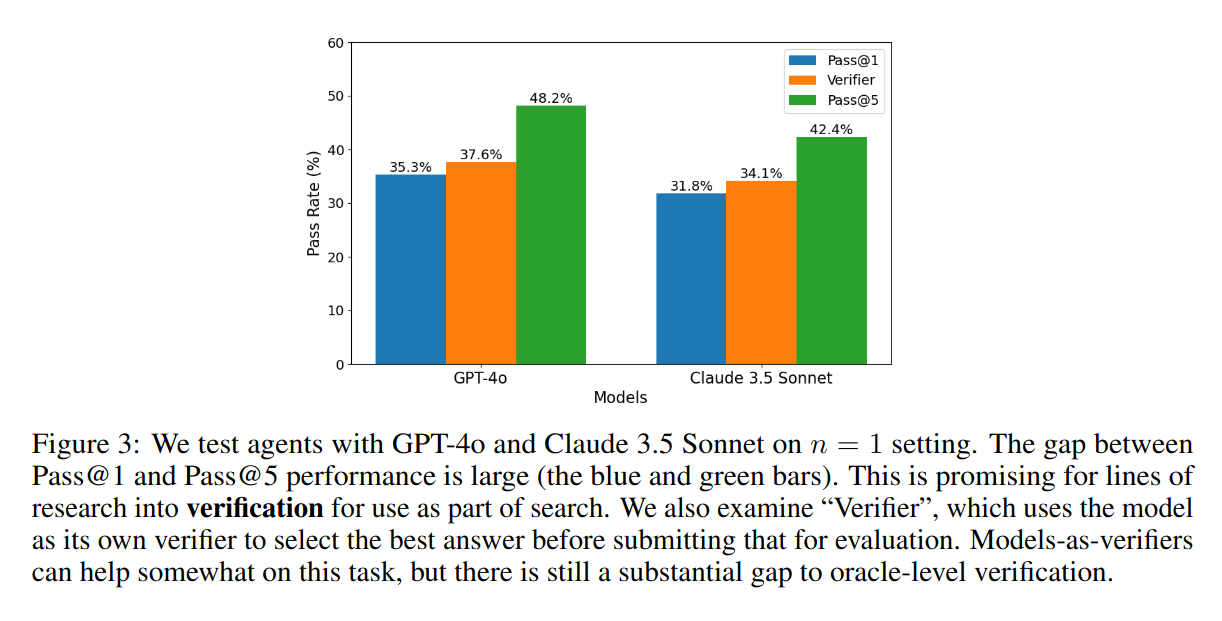

AutoExperiment finds that Verification quality is a key bottleneck in the performance of these agentic experimenters.

The ExperimentOps Agent

As I mentioned earlier, the goal is to apply new ideas to create value as I define it for my specific application.

Likewise, the ExperimentOps agent is not challenged to deliver as a superhuman research generalist. Instead, it draws practical inspiration from focused works like CORE-Bench to strategically cut down on unnecessary effort and streamline testing.

It's about using AI to find YOUR frontier.

That means embracing the insights of initiatives like AutoExperiment to boost result quality through rigorous verification. It's time to elevate hypothesis generation and testing into a disciplined, operational practice - what we call ExperimentOps.

What's Next?

First, I'm learning from what others have built to expand on my agent's set of tools.

I’m using the citation graph for better recommendations and alerts, as well as for the purpose of verification and causal understanding as described in THE-Tree.

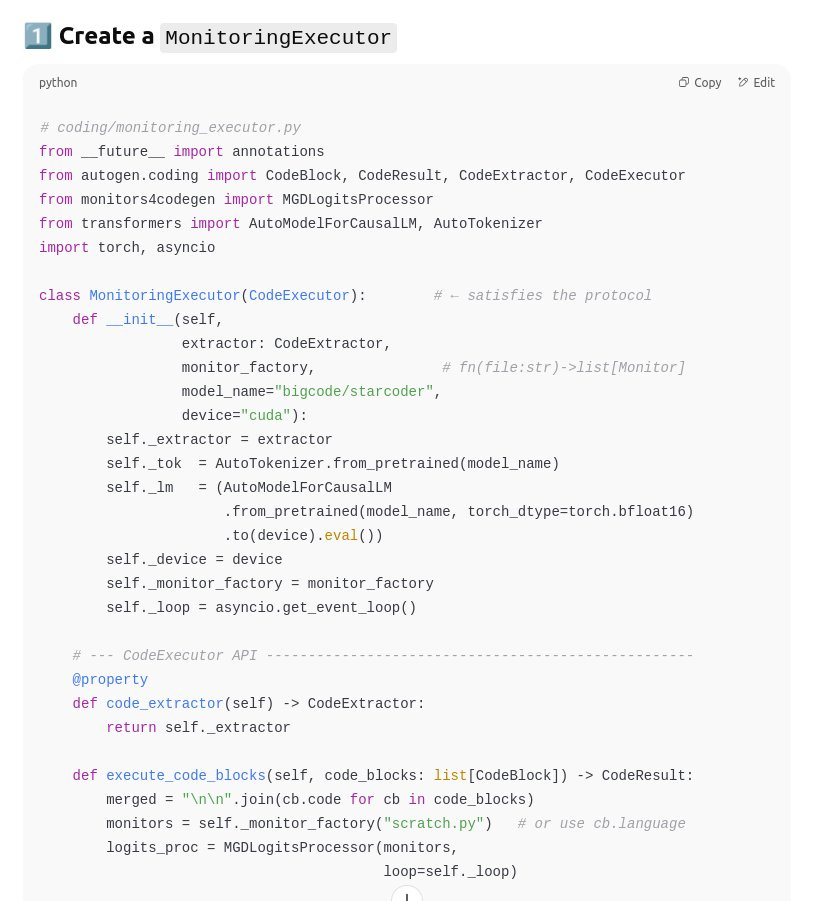

I'm also experimenting with Monitor-Guided Decoding, using code monitors to mask logits during token decoding based on the context of my repo.

Can my new code executor help accelerate testing and adapting cutting-edge AI for my application?

That's the question I aim to address in my next experiment!